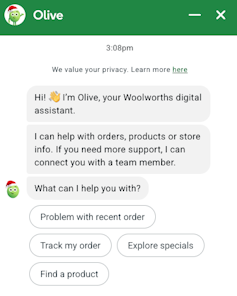

Woolworths has announced a partnership with Google to integrate agentic artificial intelligence into its “Olive” chatbot, starting in Australia later this year.

Until now, Olive has mostly answered questions, resolved issues and directed shoppers to information.

Soon, Olive will be able to do more: planning meals, interpreting handwritten recipes, applying loyalty discounts and placing suggested items directly into a customer’s online shopping basket.


Woolworths says Olive won’t complete purchases automatically, and customers will still need to approve and pay for orders.

This distinction is important, but it risks understating what is actually changing. By the time a shopper reaches the checkout, many of the substantive decisions about what to buy may already have been shaped by the system.

From helper to decision maker

The most significant change for shoppers is how decisions will be made during the shopping process – and who makes them.

Google describes its new system as a “proactive digital concierge” that understands customer intent, reasons through multi-step tasks, and executes actions.

Major United States retailers, including Walmart, Kroger and Lowe’s, are adopting the same technology. The move forms part of a broader strategy by Google to promote agent-based commerce across retail.

In practical terms, if Woolworths shoppers give their permission, the new Google Gemini version of Olive will increasingly assemble shopping baskets autonomously.

For example, a customer who uploads a photo of a handwritten recipe may receive a completed list of ingredients, reflecting product availability and discounts.

Alternatively, a customer who asks for a meal plan may receive a ready-made basket based on past preferences, current promotions and local stock levels.

This fundamentally changes the role of the shopper.

Instead of actively selecting products through browsing and comparison, shoppers will increasingly review and approve selections made for them. Decision-making shifts away from the individual towards the system.

This delegation may seem minor when considered in isolation. Over time, however, repeated delegation shapes habits, preferences and spending patterns. That is why this change deserves careful scrutiny.

Nudging by design

Woolworths presents Olive’s expanded role as a practical convenience that saves time and effort while increasing personalisation. These claims are not wrong, but they obscure an important point.

Agent-based shopping systems are designed to nudge behaviour in ways that differ markedly from traditional advertising.

When Olive highlights discounted products or promotional offers for a shopper, it does not rely on neutral criteria. Instead, its priorities reflect pricing strategies, promotional priorities and commercial relationships – not an objective assessment of the customer’s interests.

Once such judgements are embedded within an AI system that guides shopping decisions, nudging becomes part of the structure of choice, rather than a visible layer placed on top of it.

This is a particularly powerful form of influence. Traditional advertising is recognisable. Consumers know when they are being persuaded and can discount or ignore it.

Algorithmic nudging, by contrast, operates upstream. It shapes which options are surfaced, combined or omitted before the shopper encounters them. Over time, this influence becomes routine and difficult to detect.

Agent-based shopping also means AI does the browsing, comparing prices and weighing alternatives for us. Consumers are increasingly presented with curated results that invite acceptance rather than deliberation.

As fewer options are made visible and fewer trade-offs are explicitly presented, convenience begins to replace informed choice.

For these reasons, it would be wrong to treat agent-led shopping as value-neutral. Systems designed to increase loyalty and revenue should not automatically be assumed to act in the best interests of consumers, even when they deliver genuine convenience.

Unresolved data privacy questions

Data privacy is an even bigger concern.

Grocery shopping reveals far more than brand preference. Meal planning can disclose health conditions, dietary restrictions, cultural practices, religious observance, family composition and financial pressures. When an AI system manages these tasks, domestic life becomes legible to the platform that supports it.

Google has stated that customer data used in its system is not used to train models and that strict security standards apply.

These assurances are important, but they do not resolve all concerns. It is not yet clear how long household data is retained, how it is aggregated, or how insights from such data are used elsewhere.

Consent offers limited protection in this context. It is usually granted once, while profiling and optimisation continue over time. Even without direct data sharing, inferences drawn from household behaviour can shape system performance and design.

These privacy risks do not depend on misuse or data breaches. They arise from the growing intimacy of data used to shape behaviour, rather than merely record it.

Convenience shouldn’t end the conversation

For many households, Olive’s expanded capabilities will save time, reduce friction and improve the shopping experience.

But when AI moves from assistance to action, it reshapes how choices are made and how much agency people give up.

This shift should prompt a broader discussion about where convenience ends and consumer autonomy begins. When AI systems start making everyday decisions, we must ask whether consumers retain meaningful control over their choices.

Transparency about how recommendations are generated, limits on commercial incentives shaping agent behaviour, and limits on household data use should be treated as baseline expectations, not optional safeguards.

Without such scrutiny, agent-led shopping risks quietly reconfiguring consumer behaviour in ways that are difficult to detect – and even harder to reverse.

This article is republished from The Conversation under a Creative Commons license. Read the original article.